Deep Learning

ICLR 2016 (International Conference on Learning Representations)

Deep Learning: What's hot, what's hype and what can we use?

What is deep learning, and what is it not?

DL is a subset of machine learning.

DL is not an SVM, random forest, single-layer HMM, ...

DL is defined by multiple stacked layers (hence "deep").

Why so hot?

DL is exploding in terms of publications and use-cases.

It works: image recognition, captioning, robotic control, text synthesis, language generation, audio encoding, ...

Major investment by Google, Facebook, Twitter, Intel, Baidu, Amazon, Adobe, Oracle, IBM Watson, ...

Why now?

Datasets

Hardware: GPU (NVIDIA)

Where does deep learning fit into portfolio analysis?

Overlap of Natural Language Processing & Deep Learning...

Interpreting human language:

tokenization, POS tagging, NE recognition, dependency parsing, ...

Learned representations

word (word2vec), sentence, paraphrase, document, ...

Applied deep learning

supervised tasks, clustering, similarity tests, document summarization, annotating / attention mechanisms, discovery, and outlier detection.
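As a rough illustration of a similarity test on learned representations, the sketch below compares word vectors by cosine similarity. The three-dimensional vectors and the words chosen are invented for illustration; real word2vec embeddings have hundreds of dimensions and are learned from a corpus.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical embeddings, made up for this example only.
embeddings = {
    "stock": [0.9, 0.1, 0.0],
    "equity": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.9],
}

# Related words should score higher than unrelated ones.
sim_close = cosine_similarity(embeddings["stock"], embeddings["equity"])
sim_far = cosine_similarity(embeddings["stock"], embeddings["banana"])
```

The same comparison applies unchanged to sentence or document vectors, which is what makes clustering and outlier detection on embeddings straightforward.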

International Conference on Learning Representations

San Juan, Puerto Rico, 2016

Yoshua Bengio

U. Montreal, CS (22,000 citations)

Yann LeCun

Facebook, AI Director (developed ConvNets, major OCR work)

Common patterns

It's all about the architecture!

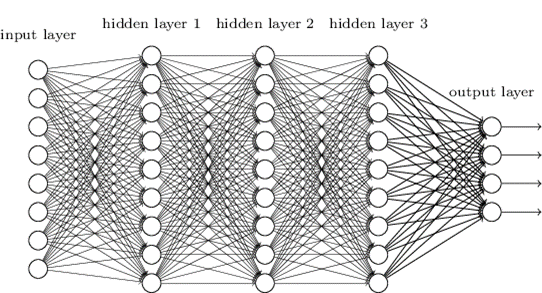

MLP: Multilayer Perceptron

RNN: Recurrent Neural Networks

CNN: Convolutional Neural Network

VAE: Variational Autoencoders

GAN: Generative Adversarial Networks

MLP: Multilayer Perceptron

Basic idea of most neural networks

Key points: activation functions, layers, backpropagation

Activation:
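A minimal sketch of activation functions and layers, assuming two common activations (ReLU and sigmoid) and a tiny 2-2-1 network. The weights are hypothetical values picked by hand; in practice they would be learned with backpropagation, which is omitted here.

```python
import math

def sigmoid(x):
    """Squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    """Passes positive values through, clips negatives to zero."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W @ x + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical weights: 2 inputs -> 2 hidden units -> 1 output.
W1 = [[1.0, -1.0], [0.5, 0.5]]
b1 = [0.0, -0.25]
W2 = [[1.0, 1.0]]
b2 = [0.0]

def mlp(x):
    hidden = dense(x, W1, b1, relu)           # hidden layer
    return dense(hidden, W2, b2, sigmoid)[0]  # output layer

y = mlp([1.0, 0.0])
```

Stacking more `dense` calls is all that "deep" means structurally; the nonlinearity between layers is what keeps the stack from collapsing into a single linear map.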

RNN: Recurrent Neural Networks

Network maintains some sense of "state"
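The idea of "state" can be sketched with a scalar vanilla RNN, assuming the standard update h_t = tanh(w_xh * x_t + w_hh * h_{t-1} + b). The weight values below are arbitrary placeholders rather than trained parameters.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One recurrent update: the new state mixes the input with the old state."""
    return math.tanh(w_xh * x + w_hh * h_prev + b)

def run_rnn(inputs, w_xh=0.5, w_hh=0.9, b=0.0):
    """Feed a sequence through the cell and record the state after each step."""
    h = 0.0
    states = []
    for x in inputs:
        h = rnn_step(x, h, w_xh, w_hh, b)
        states.append(h)
    return states

# Same inputs at steps 2 and 3, but a different first input.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
```

Although both sequences end with identical inputs, the final states differ, because the first input is still carried forward in `h`.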

Trained on text, RNNs can reproduce passages, punctuation and style

PANDARUS:

Alas, I think he shall be come approached and the day

When little srain would be attain'd into being never fed,

And who is but a chain and subjects of his death,

I should not sleep.

Second Senator:

They are away this miseries, produced upon my soul,

Breaking and strongly should be buried, when I perish

The earth and thoughts of many states.

Or titles of scientific articles (high-energy physics):

Search for CP-Violation in Right-Handed Neutrinos

Neutron Star Propagation as a Source of the Hadron-Hadron Scattering Measurement at the Planck Scale

Goldstone bosons and scattering modes from two-dimensional Hamiltonians

Baryogenesis with Yukawa Unified SUSY GUTs

Radial scattering and dense quark matter and chiral phase transition of a pseudoscalar meson at finite baryon density

Transition Form Factor Constant in a Quark-Diquark Model

A New Theory of Supersymmetry Breaking

Effective gluon propagator in QCD at zero and finite temperature and and dual gauge QCD in the BCS-BCS limit

Light scalar pair signals in top quark multiple polarizatino

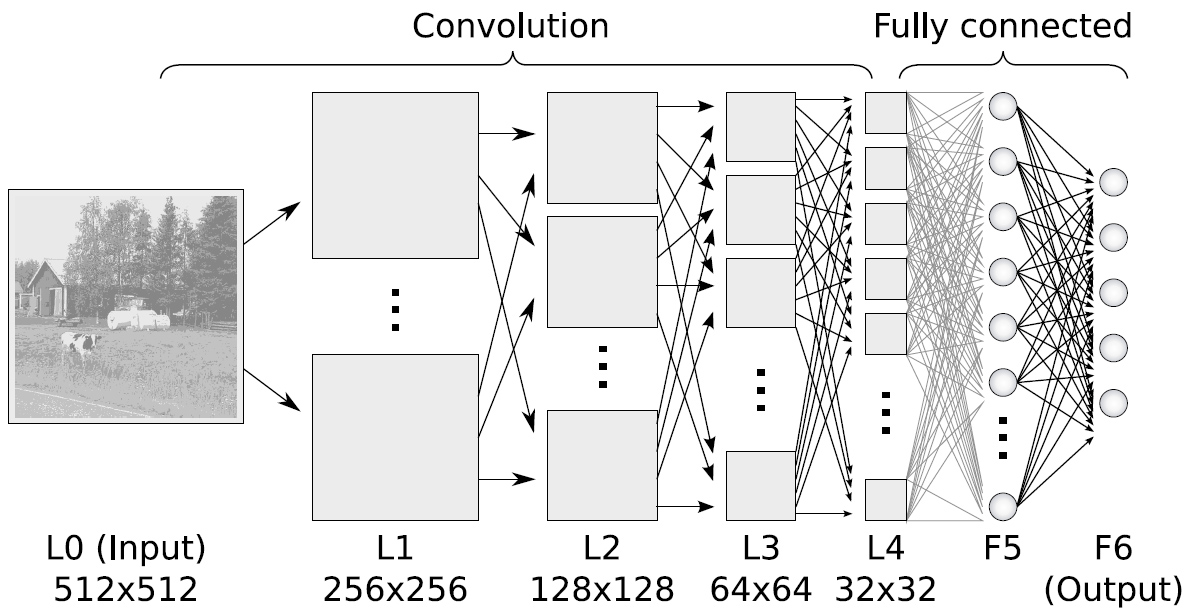

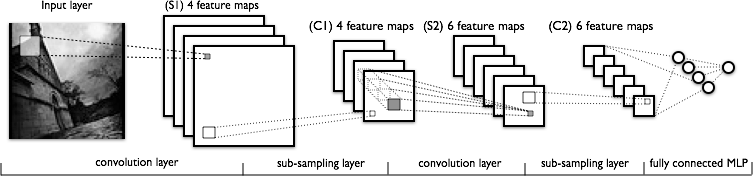

CNN: Convolutional Neural Network

Sparse / shared weights
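A small sketch of what sparse, shared weights mean in one dimension: each output depends on only a few neighbouring inputs (sparse), and the same kernel is reused at every position (shared). The signal and the two-element edge kernel are made up for illustration.

```python
def conv1d(signal, kernel):
    """Valid 1-D cross-correlation: one small kernel slides over every position."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

signal = [0, 0, 1, 1, 1, 0, 0]
edge_kernel = [-1, 1]  # responds to rising (+1) and falling (-1) edges

response = conv1d(signal, edge_kernel)
```

Here two weights cover a seven-element input. A fully connected layer producing the same six outputs would need 42 weights.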

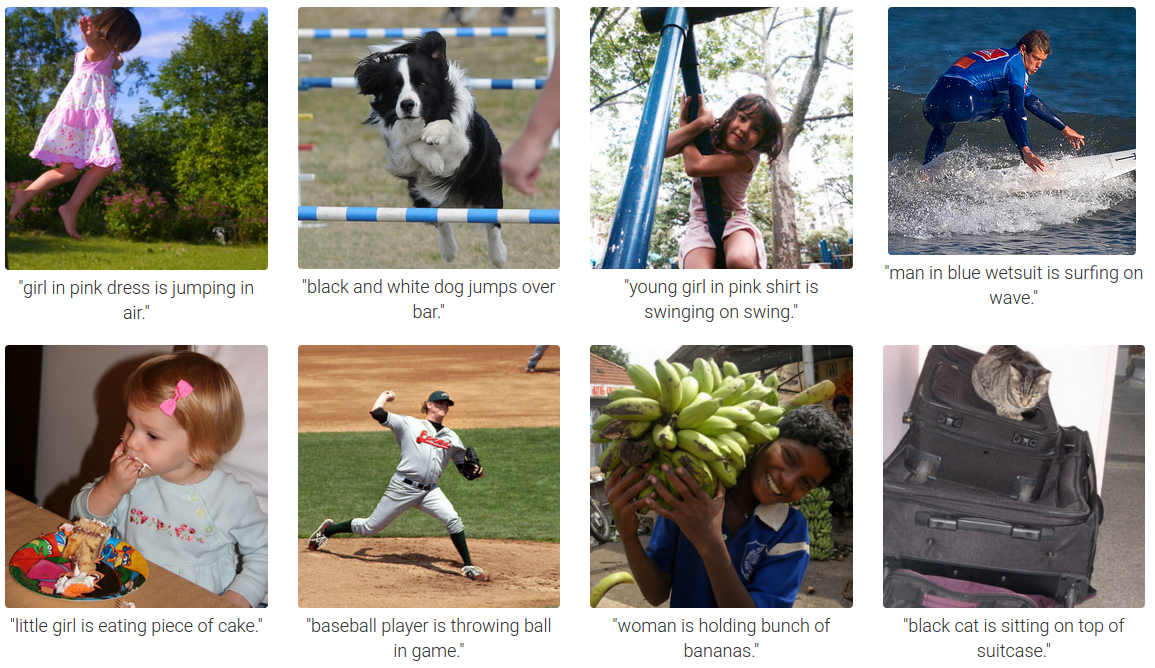

CNN + RNN: Image captioning

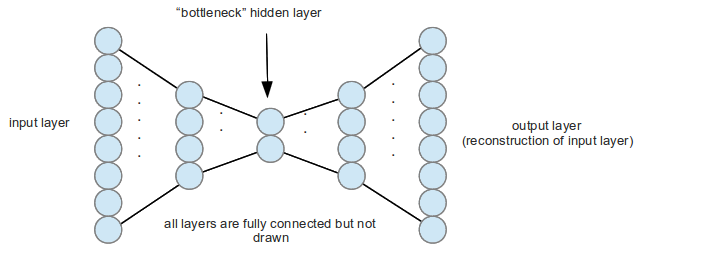

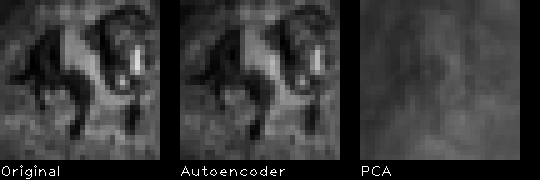

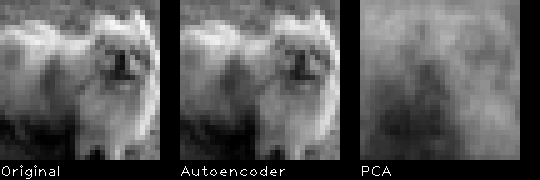

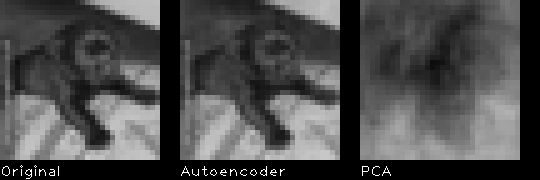

Autoencoders

Train the network to reproduce the input
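As a hedged sketch of that training objective, the toy linear autoencoder below compresses 2-D points to a single number and learns to reconstruct them by gradient descent on squared error. The data, initial weights, learning rate, and step count are all assumptions chosen so the example converges; real autoencoders add nonlinear layers and use a framework's optimizer.

```python
def encode(x, w_enc):
    """Compress a vector to a single code value."""
    return sum(w * xi for w, xi in zip(w_enc, x))

def decode(z, w_dec):
    """Expand the code back to the input dimension."""
    return [w * z for w in w_dec]

def reconstruction_loss(data, w_enc, w_dec):
    """Sum of squared differences between inputs and reconstructions."""
    total = 0.0
    for x in data:
        x_hat = decode(encode(x, w_enc), w_dec)
        total += sum((a - b) ** 2 for a, b in zip(x_hat, x))
    return total

def train(data, w_enc, w_dec, lr=0.005, steps=2000):
    """Plain batch gradient descent on the reconstruction loss."""
    w_enc, w_dec = list(w_enc), list(w_dec)
    for _ in range(steps):
        grad_enc = [0.0] * len(w_enc)
        grad_dec = [0.0] * len(w_dec)
        for x in data:
            z = encode(x, w_enc)
            err = [w * z - xi for w, xi in zip(w_dec, x)]
            dz = sum(2.0 * e * w for e, w in zip(err, w_dec))
            for i in range(len(x)):
                grad_dec[i] += 2.0 * err[i] * z
                grad_enc[i] += dz * x[i]
        w_enc = [w - lr * g for w, g in zip(w_enc, grad_enc)]
        w_dec = [w - lr * g for w, g in zip(w_dec, grad_dec)]
    return w_enc, w_dec

# Toy data lying on the line y = 2x, so one code value is enough.
data = [[-1.0, -2.0], [-0.5, -1.0], [0.5, 1.0], [1.0, 2.0]]
w_enc0, w_dec0 = [0.1, 0.1], [0.1, 0.1]

initial_loss = reconstruction_loss(data, w_enc0, w_dec0)
w_enc, w_dec = train(data, w_enc0, w_dec0)
final_loss = reconstruction_loss(data, w_enc, w_dec)
```

The target is the input itself, so no labels are needed. The useful product is the compressed code `z`, not the reconstruction.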

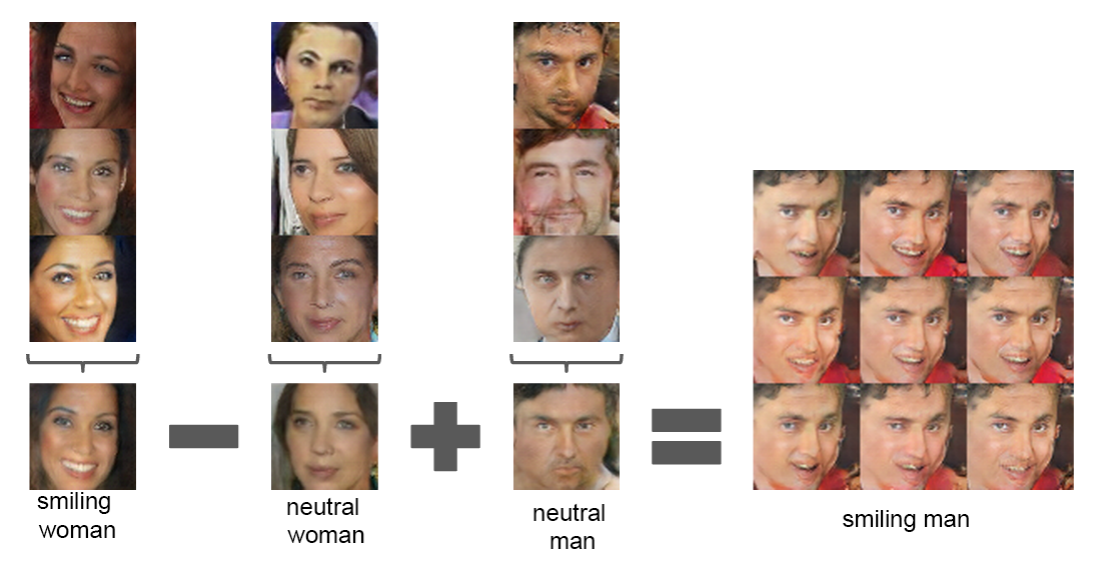

GAN: Generative Adversarial Networks

Deep networks can be overtrained and brittle

Train two networks: one generates, the other discriminates
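The two-network setup can be sketched through its loss functions alone. This assumes the discriminator outputs a probability that a sample is real, and it uses the commonly cited non-saturating generator loss; the function names are illustrative and not taken from any particular library.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0.

    d_real: discriminator output on a real sample, in (0, 1)
    d_fake: discriminator output on a generated sample, in (0, 1)
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Low when the discriminator is fooled into scoring a fake near 1."""
    return -math.log(d_fake)

# A confident, correct discriminator versus one that is guessing.
loss_confident = discriminator_loss(d_real=0.9, d_fake=0.1)
loss_guessing = discriminator_loss(d_real=0.5, d_fake=0.5)

# A generator that fools the discriminator versus one that does not.
loss_fooling = generator_loss(d_fake=0.9)
loss_caught = generator_loss(d_fake=0.1)
```

Training alternates between the two: a step that lowers the discriminator loss, then a step that lowers the generator loss, so each network improves against the other.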

GAN + Autoencoder

Not covered here

Deep Robotic Learning

More topics not covered (but very interesting!)

BlackOut: Speeding up Recurrent Neural Network Language Models With Very Large Vocabularies

Guaranteed Non-convex Learning Algorithms through Tensor Factorization

Convergent Learning: Do different neural networks learn the same representations?

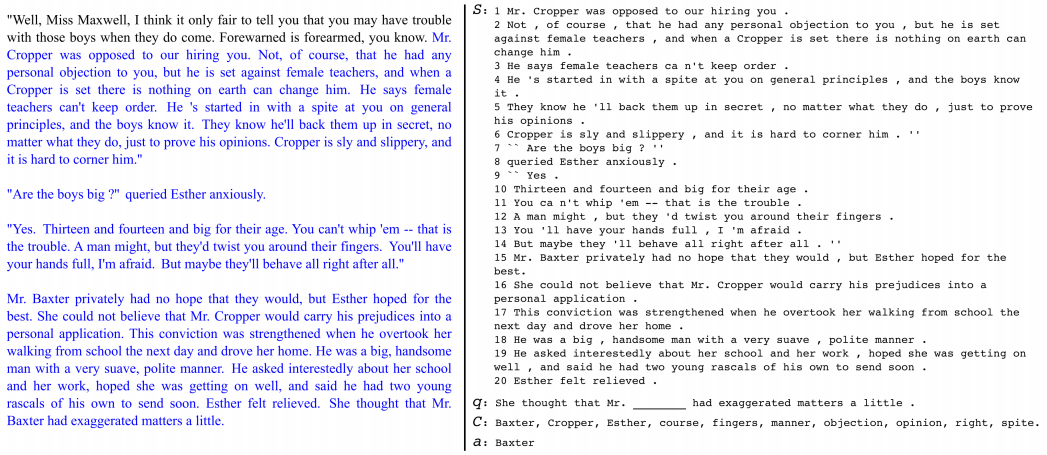

The Goldilocks Principle: Reading Children's Books with Explicit Memory Representations

Built a corpus of children's books with attention-based questions

Since humans need context to solve Q&A, train attention mechanisms!

Towards Universal Paraphrastic Sentence Embeddings, arXiv

Tested sentence similarity, entailment, and sentiment classification.

An example of a positive TE (text entails hypothesis):

text: If you help the needy, God will reward you.

hypothesis: Giving money to a poor man has good consequences.

An example of a negative TE (text contradicts hypothesis):

text: If you help the needy, God will reward you.

hypothesis: Giving money to a poor man has no consequences.

An example of a non-TE (text neither entails nor contradicts hypothesis):

text: If you help the needy, God will reward you.

hypothesis: Giving money to a poor man will make you a better person.

The Variational Fair Autoencoder, arXiv

"... learning representations that are invariant to certain nuisance or sensitive factors of variation in the data while retaining as much of the remaining information as possible. Our model is based on a variational autoencoding architecture with priors that encourage independence between sensitive and latent factors of variation."

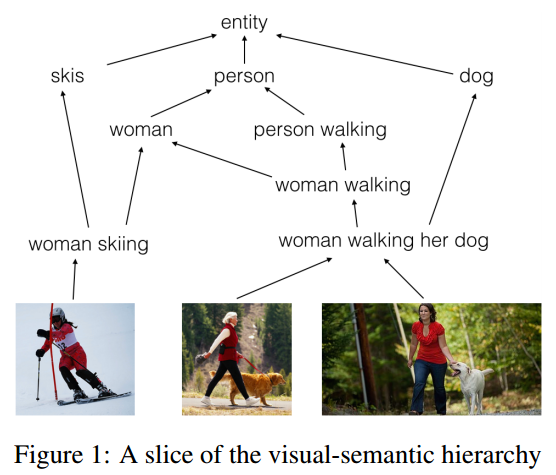

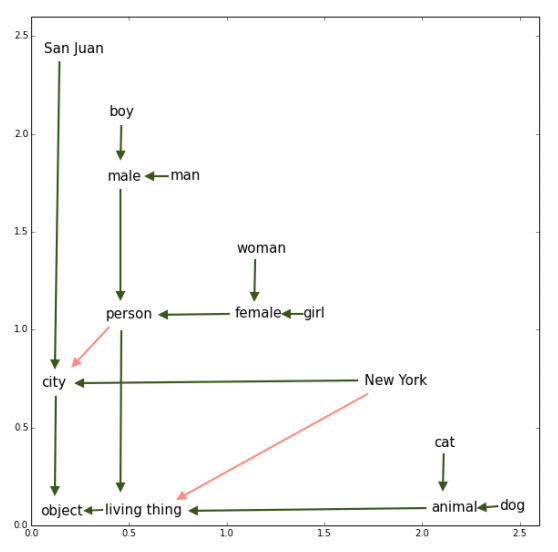

Order-Embeddings of Images and Language, arXiv

" Hypernymy, textual entailment, and image captioning can be seen as special cases of a single visual-semantic hierarchy over words, sentences, and images. In this paper we advocate for explicitly modeling the partial order structure of this hierarchy..."

Multi-layer Representation Learning for Medical Concepts, arXiv

In Electronic Health Records, each patient's visit sequence contains multiple concepts (diagnosis, procedure, and medication codes) per visit. This structure provides two types of relational information: the sequential order of visits and the co-occurrence of codes within each visit. Med2Vec learns distributed representations for both medical codes and visits from a large EHR dataset with over 3 million visits, and the learned representations are interpretable, as confirmed by clinical experts.

Thank you