Deep Optimizers

What is an optimizer?

There are lots of them! Gradient Descent, Adam, RMSProp, Momentum, Adagrad...

Used to train deep learning models. More generally, they can tackle any problem posed as minimizing a function whose gradient we can compute.
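A minimal sketch of the point above (Python is assumed, since the deck mentions cv2): plain gradient descent needs nothing except the gradient of the function being minimized. The helper `gradient_descent`, its learning rate, and the example function are illustrative choices, not taken from the talk.

```python
def gradient_descent(grad, x0, lr=0.1, steps=100):
    """Minimize a function given only its gradient `grad` (hypothetical helper)."""
    x = x0
    for _ in range(steps):
        x = x - lr * grad(x)  # step against the gradient
    return x


# Example: f(x) = (x - 3)**2 has gradient 2 * (x - 3) and its minimum at x = 3.
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(x_min)  # very close to 3.0
```

Each step shrinks the distance to 3 by a factor of `1 - 2 * lr`, so a learning rate that is too large (here, above 1) would make the iterates diverge instead.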

What other problems?

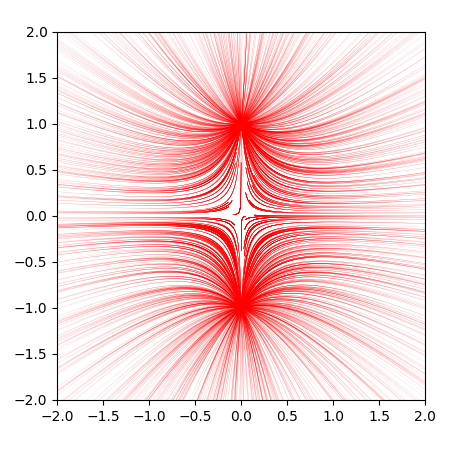

Solve for x, get two roots: i and -i
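One way to turn root finding into an optimization problem (an assumption about how the figures were made; the slides do not spell it out): minimize `L(z) = |p(z)|**2` over complex `z`, which is zero exactly at the roots. This sketch assumes `p(z) = z**2 + 1`, the monic quadratic whose roots are i and -i. For a polynomial, the gradient of `L` with respect to `(Re z, Im z)`, packed into one complex number, is `2 * p(z) * conj(p'(z))`. The names `p`, `dp`, and `find_root` are hypothetical.

```python
def p(z):
    """Assumed example polynomial with roots i and -i."""
    return z * z + 1


def dp(z):
    """Derivative p'(z)."""
    return 2 * z


def find_root(z0, lr=0.05, steps=200):
    """Gradient descent on L(z) = |p(z)|**2 over the complex plane.

    The gradient of L with respect to (Re z, Im z), written as a complex
    number, is 2 * p(z) * conj(p'(z)).
    """
    z = complex(z0)
    for _ in range(steps):
        z = z - lr * 2 * p(z) * dp(z).conjugate()
    return z


print(find_root(0.2 + 0.8j))  # approximately 1j
print(find_root(0.2 - 0.8j))  # approximately -1j
```

Which root you land on depends on where you start: because `p` has real coefficients, the dynamics are mirror-symmetric across the real axis.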

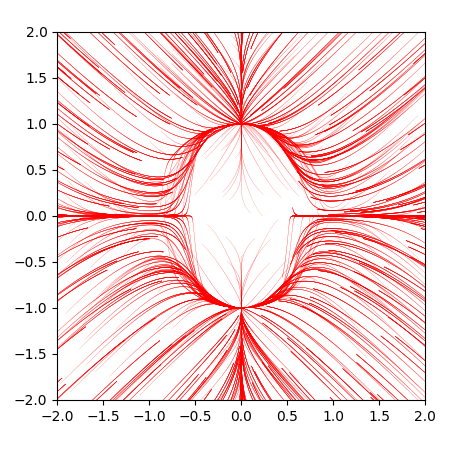

Solve for x, get three roots...
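With three roots, the interesting question is which start points end up at which root. A minimal NumPy sketch, assuming the example polynomial `z**3 - 1` (the slide does not say which polynomial was used): run gradient descent on `|p(z)|**2` from every point of a grid and label each point by the root it ends nearest to. `basin_map` and its parameters are hypothetical.

```python
import numpy as np


def basin_map(coeffs, lr=0.001, steps=1000, size=200, extent=1.5):
    """Label each grid start point by the root gradient descent on |p(z)|**2 reaches.

    coeffs: polynomial coefficients, highest degree first (NumPy convention).
    Returns (labels, roots), where labels[i, j] indexes into roots.
    """
    coeffs = np.asarray(coeffs, dtype=complex)
    dcoeffs = np.polyder(coeffs)
    roots = np.roots(coeffs)
    xs = np.linspace(-extent, extent, size)
    # Real part varies along columns; imaginary part decreases down the rows,
    # so row 0 is the top of the picture.
    z = xs[None, :] + 1j * xs[::-1, None]
    for _ in range(steps):
        p = np.polyval(coeffs, z)
        dp = np.polyval(dcoeffs, z)
        z = z - lr * 2 * p * np.conj(dp)  # complex-packed gradient of |p|**2
    # Assign each end point to its nearest root.
    dist = np.abs(z[..., None] - roots)
    return np.argmin(dist, axis=-1), roots


# Assumed example: p(z) = z**3 - 1, whose roots are the three cube roots of unity.
labels, roots = basin_map([1, 0, 0, -1], size=41)
print(roots)
print(np.bincount(labels.ravel()))  # number of start points per root
```

The small learning rate is deliberate: far from the origin `|p(z)|**2` grows like `|z|**6`, so a large step would overshoot and diverge.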

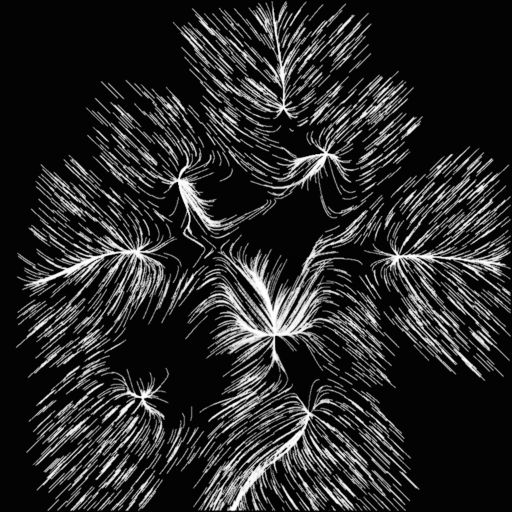

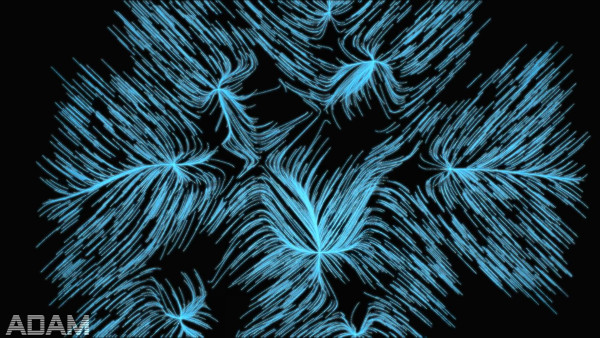

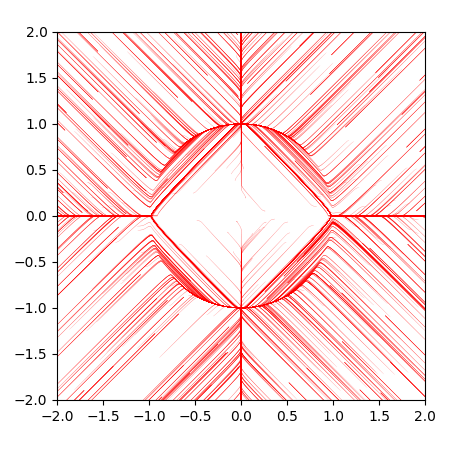

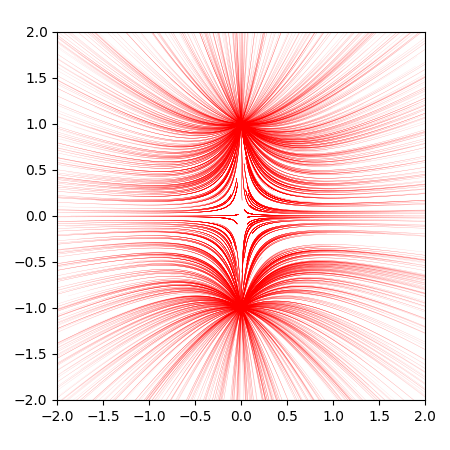

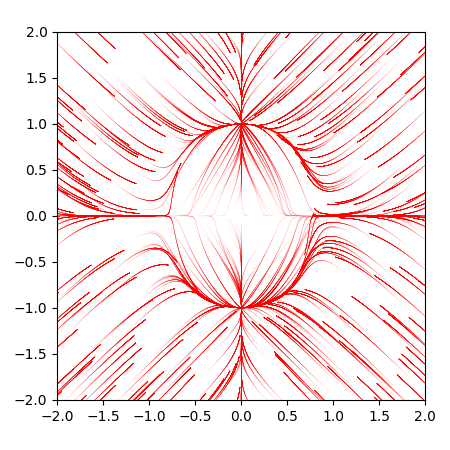

The shape of the convergence regions depends on the optimizer!
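A small experiment in the spirit of this claim, under stated assumptions: take the example polynomial `z**3 - 1` (assumed, not from the slides), start plain gradient descent and heavy-ball momentum from every point of a grid, and count how many start points reach a different root. Momentum carries velocity past the nearest root, so the regions can change shape. The names, `beta = 0.9`, and the learning rate are illustrative.

```python
import numpy as np

COEFFS = np.array([1, 0, 0, -1], dtype=complex)  # assumed example: p(z) = z**3 - 1
DCOEFFS = np.polyder(COEFFS)
ROOTS = np.roots(COEFFS)


def grad(z):
    """Complex-packed gradient of |p(z)|**2 with respect to (Re z, Im z)."""
    return 2 * np.polyval(COEFFS, z) * np.conj(np.polyval(DCOEFFS, z))


def run(z, lr=0.001, beta=0.0, steps=2000):
    """Heavy-ball momentum; beta=0 reduces to plain gradient descent."""
    v = np.zeros_like(z)
    for _ in range(steps):
        v = beta * v - lr * grad(z)
        z = z + v
    return z


def nearest_root(z):
    """Index of the nearest root for every point."""
    return np.argmin(np.abs(z[..., None] - ROOTS), axis=-1)


xs = np.linspace(-1.5, 1.5, 41)
grid = xs[None, :] + 1j * xs[::-1, None]
gd = nearest_root(run(grid))
momentum = nearest_root(run(grid, beta=0.9))
print("fraction of start points that change root:", np.mean(gd != momentum))
```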

Gradient Descent, Adam, RMSProp, Momentum
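These four optimizers differ only in how they turn a gradient into a step. A compact NumPy sketch of the textbook update rules, tested on the quadratic `0.5 * ||x - target||**2`; the class names and default hyperparameters are illustrative assumptions, not the settings behind the figures. The momentum form `v <- beta * v + g; x <- x - lr * v` is one common convention.

```python
import numpy as np


class GradientDescent:
    def __init__(self, lr=0.1):
        self.lr = lr

    def step(self, x, g):
        return x - self.lr * g


class Momentum:
    """Velocity accumulates past gradients: v <- beta * v + g; x <- x - lr * v."""

    def __init__(self, lr=0.1, beta=0.9):
        self.lr, self.beta, self.v = lr, beta, 0.0

    def step(self, x, g):
        self.v = self.beta * self.v + g
        return x - self.lr * self.v


class RMSProp:
    """Divide each coordinate's step by a running RMS of its recent gradients."""

    def __init__(self, lr=0.01, rho=0.9, eps=1e-8):
        self.lr, self.rho, self.eps, self.s = lr, rho, eps, 0.0

    def step(self, x, g):
        self.s = self.rho * self.s + (1 - self.rho) * g**2
        return x - self.lr * g / (np.sqrt(self.s) + self.eps)


class Adam:
    """Momentum on the gradient plus RMSProp-style scaling, with bias correction."""

    def __init__(self, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.b1, self.b2, self.eps = lr, beta1, beta2, eps
        self.m, self.v, self.t = 0.0, 0.0, 0

    def step(self, x, g):
        self.t += 1
        self.m = self.b1 * self.m + (1 - self.b1) * g
        self.v = self.b2 * self.v + (1 - self.b2) * g**2
        m_hat = self.m / (1 - self.b1**self.t)  # undo the bias toward 0
        v_hat = self.v / (1 - self.b2**self.t)
        return x - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


def minimize(opt, target, steps=2000):
    """Run `opt` on f(x) = 0.5 * ||x - target||**2, whose gradient is x - target."""
    x = np.zeros_like(target)
    for _ in range(steps):
        x = opt.step(x, x - target)
    return x


target = np.array([1.0, -2.0])
for opt in (GradientDescent(), Momentum(), RMSProp(), Adam()):
    print(type(opt).__name__, minimize(opt, target))
```

With a fixed learning rate, RMSProp and Adam take steps of roughly `lr` in size even near the minimum, so they settle into a small neighbourhood of it rather than converging exactly.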

Proximal Adagrad, Proximal Gradient Descent, Adagrad, FTRL
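A minimal NumPy sketch of three of the methods in this group, assuming an L1 penalty `l1 * ||x||_1` as the non-smooth term. Adagrad scales each coordinate's step by the inverse square root of its accumulated squared gradients. The proximal variants follow each gradient step with soft-thresholding, which is the proximal operator of the L1 norm. Only the L1 case is shown; the function names, `init_accum`, and the hyperparameters are illustrative assumptions.

```python
import numpy as np


def soft_threshold(x, tau):
    """Proximal operator of tau * ||x||_1: shrink every coordinate toward 0 by tau."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def adagrad(grad, x0, lr=0.5, steps=500, l1=0.0, init_accum=0.1):
    """Adagrad; with l1 > 0, each step is followed by an L1 proximal step
    (a sketch of the proximal Adagrad idea, L1 term only)."""
    x = np.array(x0, dtype=float)
    accum = np.full_like(x, init_accum)
    for _ in range(steps):
        g = grad(x)
        accum += g**2                    # running sum of squared gradients
        step = lr / np.sqrt(accum)       # per-coordinate learning rate
        x = soft_threshold(x - step * g, step * l1)
    return x


def proximal_gd(grad, x0, lr=0.1, steps=500, l1=0.0):
    """Proximal gradient descent (ISTA) for f(x) + l1 * ||x||_1."""
    x = np.array(x0, dtype=float)
    for _ in range(steps):
        x = soft_threshold(x - lr * grad(x), lr * l1)
    return x


# Smooth part: f(x) = 0.5 * ||x - b||**2, so grad f(x) = x - b.
b = np.array([3.0, 0.2, -1.0])


def grad(x):
    return x - b


print(proximal_gd(grad, np.zeros(3), l1=0.5))  # close to [2.5, 0.0, -0.5]
print(adagrad(grad, np.zeros(3), l1=0.5))
```

For this objective the exact minimizer is `soft_threshold(b, l1)`: the L1 term pulls small coordinates (here `0.2`) exactly to zero, which is why proximal methods are popular for sparse models.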

cv2 and image processing tricks
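The slides only say that cv2 and image processing tricks were used for the pictures. One plausible sketch, assuming an `(H, W)` integer array of root indices as input: color each pixel by its root with a palette lookup, blacken pixels whose neighbour reached a different root, and upscale with nearest-neighbour interpolation so the pixels stay crisp. The palette, the boundary rule, and the function names are hypothetical. `cv2.resize` with `cv2.INTER_NEAREST` is standard OpenCV, and the import is guarded so the sketch still runs without it.

```python
import numpy as np

try:
    import cv2  # OpenCV; only needed for the upscaling step
except ImportError:
    cv2 = None

# Hypothetical palette: one BGR color per root (OpenCV stores channels as BGR).
PALETTE = np.array([[60, 76, 231], [113, 204, 46], [219, 152, 52]], dtype=np.uint8)


def colorize(labels, palette=PALETTE):
    """Map an (H, W) array of root indices to an (H, W, 3) uint8 image."""
    return palette[labels]


def boundaries(labels):
    """Mark pixels whose right or lower neighbour converged to a different root."""
    edge = np.zeros(labels.shape, dtype=bool)
    edge[:, :-1] |= labels[:, :-1] != labels[:, 1:]
    edge[:-1, :] |= labels[:-1, :] != labels[1:, :]
    return edge


labels = np.array([[0, 0, 1], [0, 2, 1], [2, 2, 1]])  # toy label map
img = colorize(labels)
img[boundaries(labels)] = 0  # draw basin boundaries in black
if cv2 is not None:
    big = cv2.resize(img, None, fx=64, fy=64, interpolation=cv2.INTER_NEAREST)
```

With OpenCV available, `cv2.imwrite("basins.png", big)` writes the upscaled image to disk.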